The Uptime Engineer

👋 Hi, I am Yoshik Karnawat

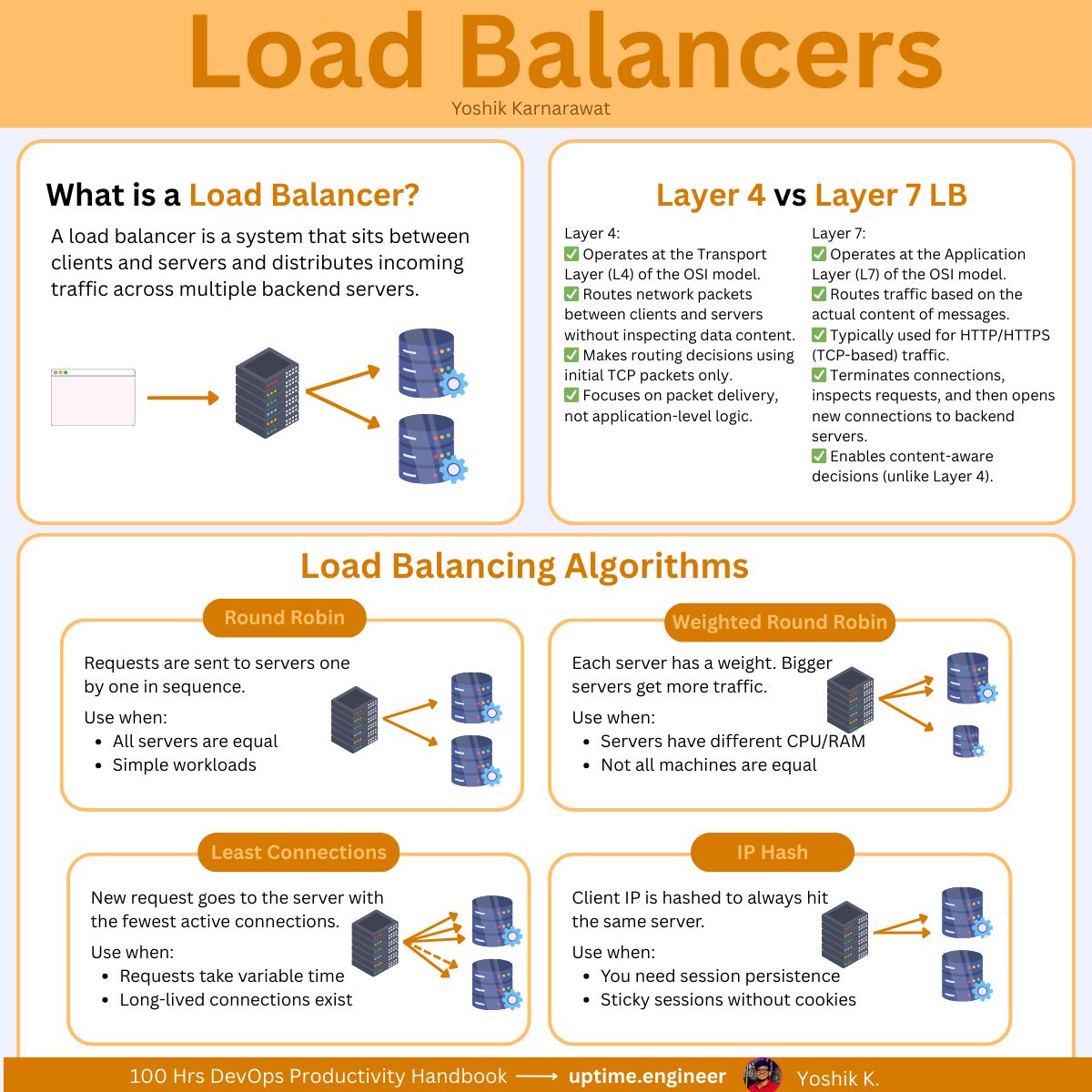

You'll learn why most 502s aren't backend failures, how health check timing creates 30-second failure windows, and why sticky sessions quietly destroy your autoscaling. By the end, you'll debug load balancer issues by tracing the request path instead of guessing, tune connection draining to match real traffic patterns, and know exactly when Layer 4 vs Layer 7 actually matters in production.

🔧 This Week's Command

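kubectl get svc -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.type}{"\t"}{.status.loadBalancer.ingress[0].hostname}{"\n"}{end}'

Lists every Service in the current namespace with its type and, for LoadBalancer services, the external endpoint, all in one shot. Essential for debugging ingress routing issues or confirming that your cloud LB provisioning actually worked. On AWS the endpoint is a hostname. Providers that assign a bare IP fill in .ingress[0].ip instead, so swap hostname for ip there.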

🔥Tool Spotlight

Cilium L4LB. eBPF-based load balancer that replaces kube-proxy for Kubernetes. 40% lower latency + native multi-cluster support. Works with AWS NLB/ALB seamlessly.

💼 Hot Jobs

DevSecOps Engineer @ Alpaca - Remote

Backend Engineer, DevOps @ Pragmatike - Remote

AWS/GCP/Docker/Kubernetes/CICD

Your load balancer returned a 502.

Backends are healthy. Health checks pass. Logs show nothing.

Most engineers start guessing at this point.

The issue is that load balancers handle three different jobs, and most teams only configure the basic routing part.

Layer 4 vs Layer 7

Your load balancer works at one of two layers.

Pick the wrong one and you either can't route correctly or you waste 10ms on every request for features you don't use.

Layer 4

Operates at TCP/UDP level.

Sees source IP, destination IP, and port numbers.

Doesn't parse HTTP.

Doesn't read paths, headers, or cookies.

Client connects → LB picks a backend → that connection stays pinned to that backend.
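To make "pinned" concrete, here is a minimal sketch of how an L4 balancer can pick a backend per connection: it hashes the connection's 5-tuple (protocol, source IP, source port, destination IP, destination port). This is a simplified model, not NLB's actual internals, and the backend addresses are hypothetical.

```python
import hashlib

# Hypothetical backend pool.
BACKENDS = ["10.0.1.10", "10.0.1.11", "10.0.1.12"]


def pick_backend(src_ip: str, src_port: int, dst_ip: str, dst_port: int, proto: str = "tcp") -> str:
    """Map a connection's 5-tuple to one backend.

    Every packet in a connection carries the same 5-tuple, so the whole
    connection lands on the same backend. Nothing above TCP/UDP is inspected.
    """
    key = f"{proto}|{src_ip}|{src_port}|{dst_ip}|{dst_port}".encode()
    # Use a stable hash: Python's built-in hash() of str changes between processes.
    digest = hashlib.sha256(key).digest()
    return BACKENDS[int.from_bytes(digest[:8], "big") % len(BACKENDS)]


if __name__ == "__main__":
    conn = ("203.0.113.7", 51514, "198.51.100.1", 443)
    # Same connection, same backend: for every packet and every HTTP request on it.
    print(pick_backend(*conn), pick_backend(*conn))
    # A new connection from the same client uses a new source port and may land elsewhere.
    print(pick_backend("203.0.113.7", 51515, "198.51.100.1", 443))
```

One consequence: an HTTP keep-alive connection carrying hundreds of requests is a single unit of work to the balancer.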

AWS NLB can handle millions of requests per second because it never looks inside your HTTP payload.

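NLB can also preserve the client's source IP, so backends see the real client address at the TCP level without needing an X-Forwarded-For header. It is not Direct Server Return, though: response packets still flow back through the NLB.

NLB also gives you one static IP per Availability Zone, and you can attach your own Elastic IPs. That's why you can whitelist NLB addresses but not ALB addresses.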

Where this breaks:

You build microservices with path routing (/api/v1 goes to Service A, /api/v2 goes to Service B).

Layer 4 can't see paths.

All your traffic hits one backend pool. Your routing doesn't work.

Layer 7

Terminates the TCP connection, parses HTTP, and routes based on content.

Can route by:

URL path

HTTP headers

Cookies

Request method

Flow: Client connects with TLS → LB terminates and decrypts → reads the HTTP request → picks a backend → opens new connection to backend → forwards request and response.

You trade some latency for routing flexibility.
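Here is a minimal sketch of the decision an L7 balancer makes after it has parsed the request. The rules, pool names, and the x-canary header are hypothetical, and longest-prefix matching is just one reasonable policy. AWS ALB, for example, evaluates listener rules in explicit priority order instead.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Request:
    method: str
    path: str
    headers: Dict[str, str] = field(default_factory=dict)


# Hypothetical rules: (path prefix, target pool).
PATH_RULES = [
    ("/api/v1", "service-a"),
    ("/api/v2", "service-b"),
]
DEFAULT_POOL = "default-pool"


def matches(path: str, prefix: str) -> bool:
    # Segment-aware prefix match, so "/api/v1" does not also match "/api/v10".
    return path == prefix or path.startswith(prefix + "/")


def route(req: Request) -> str:
    """Pick a target pool using fields an L4 balancer never sees."""
    # Header rule first. Header names are assumed to be lower-cased already.
    if req.headers.get("x-canary") == "true":
        return "canary-pool"
    candidates = [(prefix, pool) for prefix, pool in PATH_RULES if matches(req.path, prefix)]
    if not candidates:
        return DEFAULT_POOL
    # Longest (most specific) prefix wins.
    return max(candidates, key=lambda rule: len(rule[0]))[1]
```

For example, route(Request("GET", "/api/v2/users")) returns "service-b". An L4 balancer can't make this choice at all, because the path only exists after TLS is terminated and the HTTP request is parsed.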

AWS ALB uses DNS-based scaling instead of static IPs.

When traffic increases, AWS adds more ALB nodes behind the same DNS name and updates the DNS records with their IPs.

That's why you can't whitelist ALB IPs: they change as AWS scales the LB fleet.

NLB doesn't need this: it keeps its static per-AZ IPs while it scales, and it never parses individual HTTP requests.

Health checks

The LB sends probe requests every few seconds to check if backends can handle traffic.

Most problems come from getting this configuration wrong.

What actually happens:

00:00 - Backend stops responding

00:10 - First health check fails

00:20 - Second health check fails

00:30 - Third check fails → LB marks backend unhealthy

00:30 - LB stops sending new traffic to this backend

That's 30 seconds where users hit a broken backend.

Common settings:

Unhealthy threshold: 3 failures

Health check interval: 10 seconds

Many teams use interval = 5s, threshold = 2 to reduce that failure window.
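The failure window is simple arithmetic. This sketch uses a simplified model: the backend dies right after a passing check, the LB needs a fixed number of consecutive failures (one per interval), and a hung backend can make the last check wait for its full timeout before it counts as failed.

```python
def detection_window(interval_s: float, unhealthy_threshold: int, timeout_s: float = 0.0) -> float:
    """Rough worst-case seconds a dead backend keeps receiving new traffic.

    Simplified model: `unhealthy_threshold` consecutive failed checks,
    one per `interval_s`, plus up to `timeout_s` for the last check to fail.
    """
    return interval_s * unhealthy_threshold + timeout_s


for interval, threshold in [(10, 3), (5, 2)]:
    print(f"interval={interval}s threshold={threshold}: ~{detection_window(interval, threshold):.0f}s")
```

The two configurations above come out at about 30 s and 10 s. A long check timeout widens the window further.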

But making health checks too aggressive creates different problems.

When health checks fail incorrectly

Your app serves most requests in 200ms.

Your health check endpoint queries the database, checks cache, and calls an external API.

That takes 450ms.

You set the health check timeout to 500ms.

Under heavy load, health checks start timing out even though your app is still serving real user traffic fine.

LB marks backends unhealthy.

Remaining backends get even more load.

Their health checks start failing too.

The problem spreads.
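A toy model of that spiral helps show the dynamics. It assumes each backend has a per-backend request rate above which its slow health check exceeds the timeout, and that total traffic stays constant while the LB spreads it evenly over whatever is still healthy. All numbers are made up.

```python
from typing import List


def simulate_cascade(hc_limits_rps: List[float], total_rps: float) -> List[int]:
    """Return the number of healthy backends after each round of health checks.

    hc_limits_rps[i] is the per-backend load at which backend i's health
    check starts exceeding its timeout (a hypothetical, per-instance number).
    """
    healthy = list(range(len(hc_limits_rps)))
    history = [len(healthy)]
    while healthy:
        per_backend = total_rps / len(healthy)  # LB spreads load evenly over healthy targets
        passing = [i for i in healthy if per_backend <= hc_limits_rps[i]]
        if len(passing) == len(healthy):
            break  # stable: no new failures this round
        healthy = passing  # failing backends are pulled; the rest absorb their traffic
        history.append(len(healthy))
    return history


print(simulate_cascade([300, 260, 240, 220, 190], total_rps=1000))  # [5, 4, 2, 0]
print(simulate_cascade([300, 260, 240, 220, 190], total_rps=900))   # [5]
```

At 1,000 req/s, only one instance starts out over its limit, yet the pool collapses in three rounds. At 900 req/s, nothing fails.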

Fix: Use separate health check endpoints for "is the process alive?" vs "can this instance accept traffic right now?".

Kubernetes has built-in liveness and readiness probes for this. Cloud load balancers need you to set it up manually.
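Here is a minimal sketch of that split using only Python's standard library. The /livez and /readyz paths, the port, and the warmed_up/draining flags are assumptions; neither your LB nor your framework requires them. Point the load balancer's health check at the readiness endpoint, and keep the liveness endpoint for "should this process be restarted?" decisions.

```python
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Tuple


class AppState:
    """Hypothetical process-level flags that the readiness check reads."""

    def __init__(self) -> None:
        self.lock = threading.Lock()
        self.warmed_up = False  # set True once caches are loaded, pools opened, etc.
        self.draining = False   # set True on shutdown, before deregistration


STATE = AppState()


def liveness() -> Tuple[int, str]:
    # "Is the process alive?" If this handler runs, the answer is yes.
    # Deliberately no database, cache, or external API calls.
    return 200, "alive"


def readiness(state: AppState) -> Tuple[int, str]:
    # "Can this instance accept traffic right now?" Only cheap, local checks.
    with state.lock:
        if state.draining:
            return 503, "draining"
        if not state.warmed_up:
            return 503, "warming up"
    return 200, "ready"


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if self.path == "/livez":
            status, body = liveness()
        elif self.path == "/readyz":
            status, body = readiness(STATE)
        else:
            status, body = 404, "not found"
        payload = body.encode()
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port: int = 8080) -> None:
    """Blocking: serve the two health endpoints on the given port."""
    ThreadingHTTPServer(("0.0.0.0", port), HealthHandler).serve_forever()
```

Neither check touches a dependency. A slow database therefore can't make the LB pull an instance that is still serving real traffic.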

Sticky sessions

Stateless apps don't need this.

But if you're storing session data in memory or you want cache locality, you need the same user to keep hitting the same backend.

Cookie-based:

LB creates a cookie on the first request.

Cookie contains an identifier for which backend to use (usually encrypted or hashed).

Future requests with that cookie go to the same backend.
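Here is a minimal sketch of cookie-based stickiness. It assumes the LB signs the backend index with an HMAC so clients can't forge or tamper with it. The cookie format, key handling, and backend names are hypothetical; real load balancers use their own opaque formats.

```python
import hashlib
import hmac
import itertools
import secrets
from typing import Optional, Tuple

BACKENDS = ["server-1", "server-2", "server-3"]      # hypothetical pool
SECRET = secrets.token_bytes(32)                      # hypothetical LB-side signing key
_next_index = itertools.cycle(range(len(BACKENDS)))  # round robin for new visitors


def _sign(index: int) -> str:
    return hmac.new(SECRET, str(index).encode(), hashlib.sha256).hexdigest()


def sticky_route(cookie: Optional[str]) -> Tuple[str, str]:
    """Return (backend, cookie to send back) for one request."""
    if cookie:
        index_str, _, sig = cookie.partition(".")
        if index_str.isdecimal() and int(index_str) < len(BACKENDS):
            if hmac.compare_digest(sig.encode(), _sign(int(index_str)).encode()):
                # Valid cookie: keep this user on the same backend.
                return BACKENDS[int(index_str)], cookie
    # First request, or a missing/forged cookie: pick a backend and pin the user to it.
    index = next(_next_index)
    return BACKENDS[index], f"{index}.{_sign(index)}"
```

Nothing here considers how much traffic each pinned user sends.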

The problem:

User A sends 1000 requests/min → stuck on Server 1.

Users B through Z send 10 requests/min each → distributed across Servers 2-5.

Server 1 is at 95% CPU. The rest are at 20%.

Your autoscaler sees "average CPU: 35%" and doesn't scale up.

Meanwhile Server 1 is melting.
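Here is the arithmetic behind that blind spot, using the CPU numbers from the scenario and a hypothetical 70% scale-out threshold: (95 + 4 × 20) / 5 = 35%.

```python
# CPU utilization from the scenario above, in percent.
cpu = {"server-1": 95, "server-2": 20, "server-3": 20, "server-4": 20, "server-5": 20}
SCALE_OUT_AT = 70  # hypothetical target-tracking threshold on average CPU

average = sum(cpu.values()) / len(cpu)
hottest = max(cpu, key=cpu.get)

print(f"average CPU: {average:.0f}% -> scale out: {average > SCALE_OUT_AT}")
print(f"hottest: {hottest} at {cpu[hottest]}%")
```

The average hides the outlier completely. Only a per-instance (or max) view shows server-1.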

IP hash: target server = hash(client_ip) % num_backends

This breaks when:

Multiple users share one IP (corporate NAT) → all hit the same backend

You scale from 3 to 4 backends → the modulus changes, and most existing sessions (roughly 3 in 4) land on a different backend and break
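Here is a quick sketch of how many clients move when a pool grows from 3 to 4 backends under modulo hashing. A client keeps its backend only if h mod 3 equals h mod 4, which holds for just 3 of every 12 hash values, so about 75% should move. The client IPs are hypothetical.

```python
import hashlib
import ipaddress


def backend_index(client_ip: str, num_backends: int) -> int:
    # Stable hash (Python's built-in hash() of str is randomized per process).
    digest = hashlib.sha256(client_ip.encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_backends


# 10,000 hypothetical client IPs: 10.0.0.0 through 10.0.39.15.
clients = [str(ipaddress.IPv4Address(0x0A000000 + i)) for i in range(10_000)]
moved = sum(backend_index(ip, 3) != backend_index(ip, 4) for ip in clients)
print(f"{moved / len(clients):.1%} of clients map to a different backend after 3 -> 4")
```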

Better approach:

Store sessions in Redis or Memcached.

Then any backend can serve any request.

Scaling works predictably.

When a backend dies, sessions survive.
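Here is a minimal sketch of why this works: backends keep no session data in process memory, and they read and write through one shared store. The SessionStore class is a hypothetical in-memory stand-in for Redis or Memcached. A real deployment would call a client library over the network and set an expiry on each key.

```python
import secrets
from typing import Dict, Optional


class SessionStore:
    """Hypothetical in-memory stand-in for Redis/Memcached, shared by all backends."""

    def __init__(self) -> None:
        self._data: Dict[str, dict] = {}

    def get(self, session_id: str) -> Optional[dict]:
        return self._data.get(session_id)

    def set(self, session_id: str, value: dict) -> None:
        self._data[session_id] = value


class Backend:
    """A stateless app server: all session data lives in the shared store."""

    def __init__(self, name: str, store: SessionStore) -> None:
        self.name = name
        self.store = store

    def add_to_cart(self, session_id: Optional[str], item: str) -> str:
        session = self.store.get(session_id) if session_id else None
        if session is None:
            # Unknown or missing session: start a new one.
            session_id = secrets.token_hex(16)
            session = {"cart": []}
        session["cart"].append(item)
        self.store.set(session_id, session)
        return session_id


store = SessionStore()
a, b = Backend("server-1", store), Backend("server-2", store)
sid = a.add_to_cart(None, "book")  # first request lands on server-1
sid = b.add_to_cart(sid, "lamp")   # next request lands on server-2, same session
print(store.get(sid))              # {'cart': ['book', 'lamp']}
```

If server-1 disappears, any other backend picks up the session from the store, and the LB is free to use plain round robin.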

Connection draining

You remove an instance or deploy new code.

Users still get connection errors.

The usual culprit is connection draining (AWS calls it "deregistration delay").

When you remove a target, the LB keeps existing connections open for a set amount of time (default is often 300 seconds), then forcibly closes anything still open.

Set it too low: Users get cut off mid-request.

Set it too high: Deploys take forever, and bad instances take longer to be fully removed.

The LB is doing exactly what you told it to do, or what the default config says if you never changed it.

Match this to your actual traffic patterns:

Fast API calls: 30 seconds

File uploads: 180 seconds

Long-polling or streaming: 300+ seconds
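One way to "match your traffic" is to measure request durations and pick the smallest delay that rarely cuts anything off. This sketch checks the three values above against sample data. The 0.1% tolerance and the durations are assumptions. On AWS the setting is the target group attribute deregistration_delay.timeout_seconds, which accepts 0 to 3600 seconds and defaults to 300.

```python
from typing import Sequence


def cut_off_fraction(durations_s: Sequence[float], delay_s: float) -> float:
    """Worst-case share of requests killed by the draining timeout: a request
    starting just before deregistration survives only if it finishes within delay_s."""
    return sum(d > delay_s for d in durations_s) / len(durations_s)


def pick_delay(durations_s: Sequence[float], candidates=(30, 180, 300), max_cut: float = 0.001) -> int:
    """Smallest candidate delay whose worst-case cut-off rate is acceptable."""
    for delay in sorted(candidates):
        if cut_off_fraction(durations_s, delay) <= max_cut:
            return delay
    # Nothing is long enough: requests that outlive every option (e.g. streams)
    # need another strategy, such as clients that reconnect cleanly.
    return max(candidates)


# Hypothetical measured durations, in seconds.
api_calls = [0.2] * 990 + [4.0] * 10
uploads = [5.0] * 900 + [60.0] * 90 + [150.0] * 10
print(pick_delay(api_calls), pick_delay(uploads))
```

With these samples, the API traffic fits in 30 seconds and the uploads need 180.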

TLS termination

Option 1: LB terminates TLS

Client --TLS--> LB --HTTP--> Backend

Pros: Central cert management, backends don't handle encryption, LB can read content for routing.

Cons: Traffic inside your network is unencrypted.

Most cloud setups use this.

Option 2: TLS passthrough

Client --TLS--> LB --TLS--> Backend

LB forwards encrypted traffic without decrypting it.

Pros: End-to-end encryption.

Cons: Layer 4 only (no path-based routing), every backend manages its own certs.

Option 3: Re-encrypt

Client --TLS--> LB (decrypt + re-encrypt) --TLS--> Backend

Pros: End-to-end encryption and content-based routing.

Cons: Double encryption overhead, complicated cert management.

AWS ALB supports this but most teams skip it because encrypting internal traffic rarely justifies the extra latency.

How to debug LB issues

Walk through the request path:

1. DNS: Does dig your-lb-example.com return the right IPs?

2. LB listener: Do the listener and target group exist?

3. Health checks: Are the targets actually healthy? Check the logs; don't just trust the status display.

4. Target selection: Which algorithm is active? Are weights configured correctly?

5. Backend connectivity: Can the LB reach the backend? Check security groups and network ACLs.

6. Backend response: Is the backend timing out? Check backend logs, not just LB logs.

7. Return path: Is the response making it back? Look for connection resets or timeouts.

Most 502 errors happen at step 5 or 6.

Most people start debugging at step 3.
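Here is a small standard-library sketch for spot-checking the DNS step and the backend connectivity step. Run it from a host in the same network as the load balancer, because a check from your laptop says little about security groups that only admit the LB's subnet. The script name, hostnames, and ports are placeholders. It checks TCP reachability only, not whether the backend returns a valid HTTP response.

```python
import socket
import sys
from typing import List


def resolve(hostname: str) -> List[str]:
    """DNS step: which addresses does this name resolve to?"""
    infos = socket.getaddrinfo(hostname, None, proto=socket.IPPROTO_TCP)
    return sorted({info[4][0] for info in infos})


def tcp_reachable(host: str, port: int, timeout_s: float = 3.0) -> bool:
    """Connectivity step: can a TCP connection to the backend be opened from this host?"""
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:  # refused, timed out, no route to host, ...
        return False


if __name__ == "__main__" and len(sys.argv) == 4:
    # Usage (hypothetical script name): python lbcheck.py <lb-hostname> <backend-ip> <backend-port>
    lb_name, backend_host, backend_port = sys.argv[1], sys.argv[2], int(sys.argv[3])
    print("DNS:", resolve(lb_name))
    print("backend reachable:", tcp_reachable(backend_host, backend_port))
```

A refused or timed-out connection here usually points at security groups, network ACLs, or a process not listening on that port.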

To summarise

Layer 4 is fast but can't see HTTP. Layer 7 adds latency but lets you route by content.

Health checks need to balance detection speed against false positives.

Sticky sessions cause uneven load distribution. Use external session storage when possible.

Connection draining needs to match your actual request durations.

Join 1,000+ engineers learning DevOps the hard way

Every week, I share:

How I'd approach problems differently (real projects, real mistakes)

Career moves that actually work (not LinkedIn motivational posts)

Technical deep-dives that change how you think about infrastructure

No fluff. No roadmaps. Just what works when you're building real systems.

👋 Find me on Twitter | LinkedIn | Connect 1:1

Thank you for supporting this newsletter.

Y’all are the best.